Runway has introduced Aleph, an in‑context video model built to edit and transform existing footage with natural language prompts, marking a notable shift in AI-assisted post-production. The company’s research overview positions Aleph as a multi‑task system that can add, remove, and restyle elements, generate new camera angles, and maintain continuity across frames, directly from user guidance. Read the announcement.

A Model Aimed at Real Scenes, Not Just Synthetic Clips

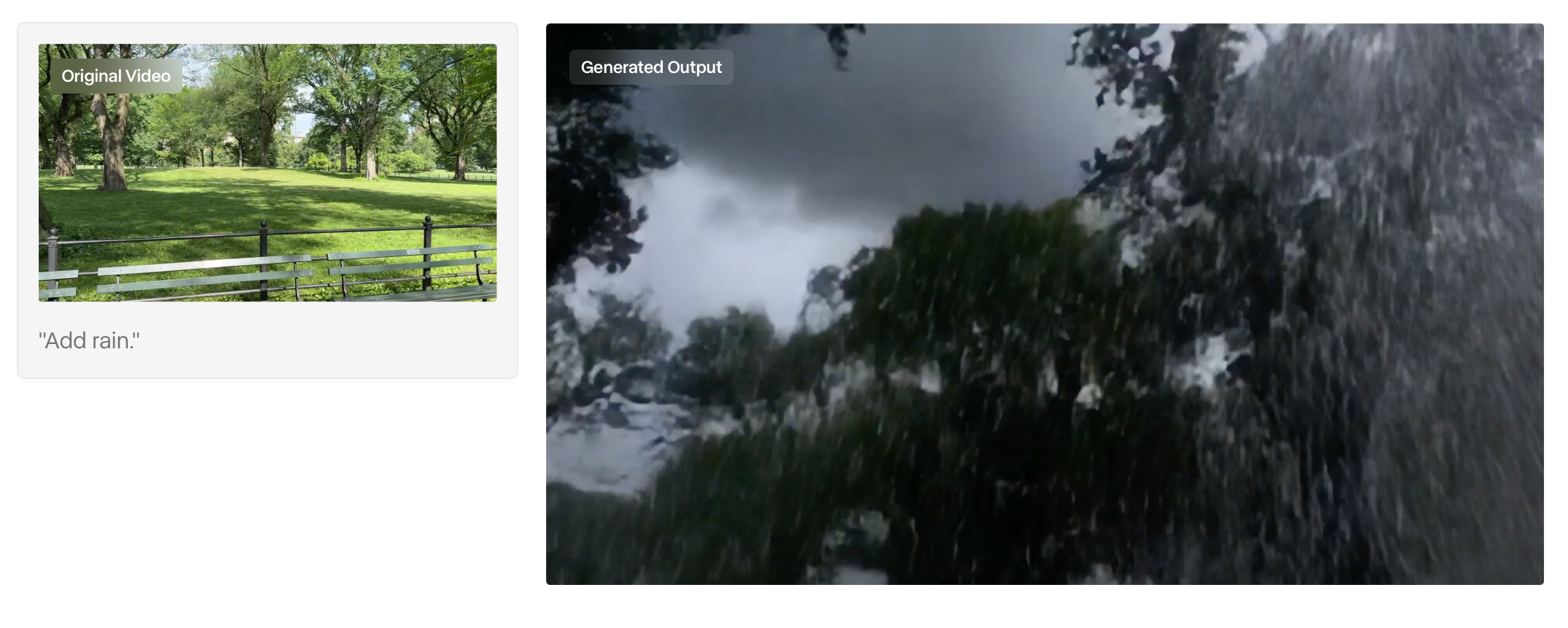

Unlike earlier creative models that largely focused on generating short clips from scratch, Aleph is expressly aimed at modifying real-world footage. Runway’s materials describe a system that parses scene geometry, materials, lighting, motion, and continuity to deliver edits that remain consistent over time. In practice, that means weather changes, relighting, object substitutions, and even entirely new camera angles can be requested in plain language, with the output designed to respect the physics and composition of the original take.

Aleph’s headline is not another proof-of-concept demo; it is the promise of single‑model, multi‑task editing that treats your footage as a coherent 3D scene and responds to direction as if it were a collaborator on set.

What’s New and Noteworthy

The model’s scope spans several categories that traditionally require multiple tools and teams. Notable capabilities include:

- Object and subject operations: Add, remove, replace, or transform objects and subjects while preserving scene lighting and perspective.

- Environmental and style shifts: Change weather, season, time of day, and stylistic treatment without reshoots.

- Camera re‑framing: Generate fresh angles of the same moment, from wide shots to aerial perspectives, derived from the scene’s inferred structure.

- Continuity‑aware edits: Maintain identity and motion consistency across frames when altering wardrobe, age, or appearance.

- Background removal: Perform clean extractions without a green screen to enable compositing.

Runway emphasizes that these tasks operate within a single, promptable system, with no plug‑in hopping and no manual keying or rotoscoping for common composites, which brings the editorial and VFX phases meaningfully closer together. Documentation from Runway outlines the model’s intended behavior and scope across these edit types. See the Aleph overview.

Access and Rollout

Runway indicates a phased rollout for Aleph, prioritizing professional accounts before broader availability. The company’s support notes point to access via generative sessions in both Chat Mode and Tool Mode, reflecting two approaches to creative direction: conversational iteration or tight parameter control. Read the access details.

Aleph at a Glance

| Category | Details (as documented) |
|---|---|
| Model Focus | In‑context video editing and generation across multiple tasks in one system |
| Core Capabilities | Add/remove/replace objects and subjects; style and lighting changes; weather/season/time shifts; background removal; new camera angles |
| Modes | Chat Mode (iterative, conversational); Tool Mode (granular control) |
| Output Duration | Up to 5 seconds per generation (documented limit) |
| Cost Metric | 15 credits per second (documented rate) |
| File Constraints | Input file size up to 64 MB |
| Resolutions/Formats | Common aspect ratios including 16:9, 9:16, 1:1, 4:3, 3:4, and 21:9 with documented pixel dimensions |
| Intended Users | Enterprise and professional creators in early access; broader rollout planned |

These parameters are designed to enable rapid iteration and review cycles. While short outputs may sound constrained in traditional editorial terms, the approach aligns with modern AI workflows that favor frequent, targeted generations to establish look and direction before scaling up delivery.
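To make the table's figures concrete, here is a minimal Python sketch of the arithmetic they imply, plus a simple pre-flight check on an input clip. It is not Runway's API. The function names are hypothetical, billing is assumed to scale linearly per second, "64 MB" is read as 64 MiB, and the ratio check uses an arbitrary 1% tolerance rather than the documented pixel dimensions, which this article does not list.

```python
# Figures copied from the "Aleph at a Glance" table. They are launch-time
# documentation values and may change.
MAX_SECONDS_PER_GENERATION = 5
CREDITS_PER_SECOND = 15
MAX_INPUT_BYTES = 64 * 1024 * 1024  # assumption: "64 MB" interpreted as 64 MiB
SUPPORTED_RATIOS = [(16, 9), (9, 16), (1, 1), (4, 3), (3, 4), (21, 9)]


def generation_cost(seconds):
    """Credits for one generation, assuming simple per-second billing."""
    if not 0 < seconds <= MAX_SECONDS_PER_GENERATION:
        raise ValueError(
            f"seconds must be in (0, {MAX_SECONDS_PER_GENERATION}]"
        )
    return seconds * CREDITS_PER_SECOND


def fits_size_limit(size_bytes):
    """True if a non-empty input file is within the documented size cap."""
    return 0 < size_bytes <= MAX_INPUT_BYTES


def matches_listed_ratio(width, height, tolerance=0.01):
    """True if width:height is within `tolerance` (relative) of a listed ratio."""
    ratio = width / height
    return any(
        abs(ratio - w / h) <= tolerance * (w / h) for w, h in SUPPORTED_RATIOS
    )


if __name__ == "__main__":
    # A full-length generation at the documented rate: 5 s x 15 credits/s.
    print(generation_cost(5))
    print(matches_listed_ratio(1280, 720))
```

Under these assumptions, a maximum-length generation costs 5 × 15 = 75 credits, which is the unit to multiply by the number of iterations when budgeting a look-development pass.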

Industry Signals Around Launch

- Gen:48 Aleph Edition: A timed, 48‑hour short‑film challenge running August 23–25, 2025, requires participants to create a 1–4 minute film incorporating Aleph. The event is structured to stress‑test both creative workflows and model reliability in a public setting. Event details.

- Media partnerships: Runway announced a collaboration with AMC Networks to explore the use of its models across marketing and TV development pipelines, an indicator of interest from established media operators in AI‑assisted creative tooling. Partnership announcement.

These moves aim to validate the model’s utility beyond demos through competition constraints and professional use cases, while collecting feedback that can shape subsequent releases.

What Aleph Suggests About the Next Stage of AI Editing

By centering on in‑context understanding of real footage, Aleph underscores a trend that has been gathering pace across creative AI: systems that learn the specifics of a scene—geometry, materials, lighting dynamics—and then apply multi‑step edits as if they were composited within a physically consistent volume. The ability to request camera changes and stylistic shifts within the same sequence emphasizes a model of production where editorial, VFX, and look‑development converge earlier in the process.

That convergence could influence how teams plan shoots, with fewer contingency plates or pick‑ups, and more on‑set decisions deferred to post where edits are enacted at the scene level rather than the frame level. For studios and independent creators alike, the pathway from a rough assembly to a polished, stylized version of the same scene narrows, potentially shortening timelines and reshaping budget allocations.

The immediate question for studios: if a single model can handle relighting, weather swaps, object work, and re‑framing, how should post pipelines and tool stacks reorganize to exploit that consolidation?

Context and Constraints

While the promise is expansive, the initial technical parameters, such as the five‑second generation length and credit‑based pricing, illustrate the pragmatic boundaries of today’s models. Many professional workflows will adopt Aleph first for targeted shots: hero moments, transitions, and pickup sequences where its unique strengths deliver the biggest gains. As with other frontier models, throughput, latency, and version reliability will be closely watched by teams assessing where Aleph fits within broadcast and theatrical timelines.
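One way to reason about those boundaries is to work out how a longer shot would break into generation-sized windows and what that would cost. The Python sketch below is planning arithmetic only. The `plan_segments` helper is hypothetical, the defaults are the five-second and 15-credits-per-second figures cited in this article, and nothing here implies that Runway documents a segment-and-stitch workflow or that adjacent generations will match seamlessly.

```python
def plan_segments(shot_seconds, max_seconds=5.0, credits_per_second=15):
    """Split a shot into consecutive windows of at most `max_seconds`.

    Returns (segments, total_credits), where segments is a list of
    (start, end) times in seconds. Assumes linear per-second billing.
    """
    if shot_seconds <= 0:
        raise ValueError("shot_seconds must be positive")

    segments = []
    start = 0.0
    while start < shot_seconds:
        # Clamp the final window so it ends exactly at the shot boundary.
        end = min(start + max_seconds, shot_seconds)
        segments.append((start, end))
        start = end

    total_seconds = sum(end - start for start, end in segments)
    return segments, total_seconds * credits_per_second


if __name__ == "__main__":
    segments, credits = plan_segments(12)
    for start, end in segments:
        print(f"{start:>5.1f}s to {end:>5.1f}s")
    print("estimated credits:", credits)
```

For a 12-second shot this yields three windows (two of five seconds and one of two) and an estimate of 180 credits per full pass, before any retries or alternate takes.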

Runway’s documentation signals ongoing iteration, with Chat Mode and Tool Mode intended to support different creative styles. The company frames Aleph as a long‑term platform rather than a single feature release, implying that capability breadth, duration limits, and fidelity are areas to watch as it matures.