OpenAI’s new coding model, GPT-5.3-Codex, is now generally available in GitHub Copilot, and for a lot of teams that’s the moment “AI helps me code” turns into “AI helps me ship.” The rollout is live across Copilot surfaces, with details in GitHub’s changelog post: GPT-5.3-Codex is now generally available for GitHub Copilot.

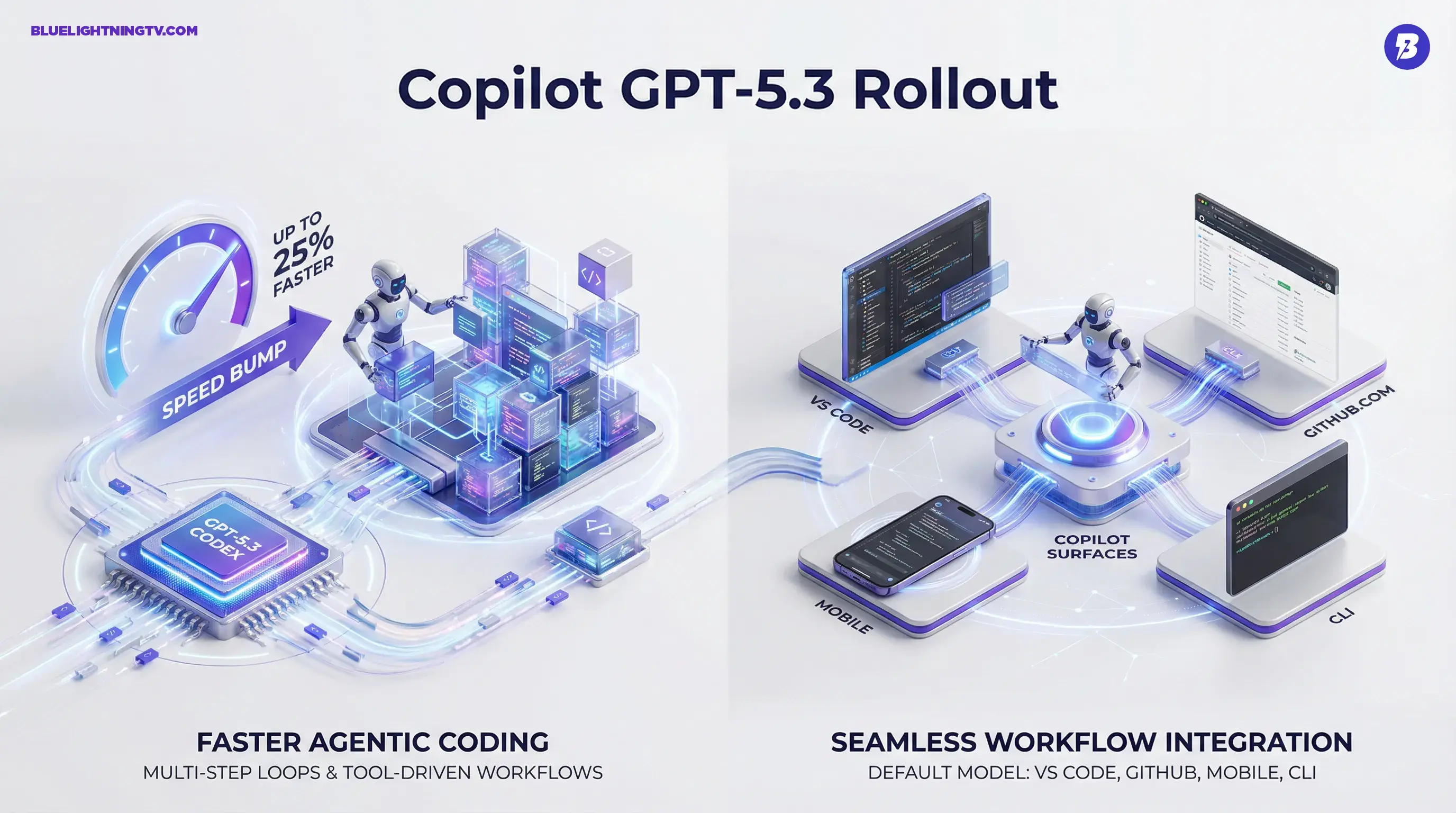

The headline upgrade is not vibes. It is practical: better performance in long-running, tool-driven workflows, plus a speed bump (up to 25% faster than GPT-5.2-Codex on agentic coding tasks) that matters when you are running multi-step loops all day. OpenAI’s release post frames GPT-5.3-Codex as a step forward for agentic work and highlights its security posture: Introducing GPT-5.3-Codex.

What actually shipped

GitHub is not just adding another option to Copilot’s model picker. GPT-5.3-Codex is available there now, and GitHub says it will become the default model for many users as the rollout completes.

GitHub’s changelog highlights two claims that matter in production:

- Up to 25% faster vs. GPT-5.2-Codex on agentic coding tasks

- Improved reasoning and execution in complex, tool-driven workflows

This is the difference between a model that drafts code and a model that helps you keep momentum when the task becomes: edit these files, update tests, follow tool output, and do not break the build.

Where you’ll see it

GPT-5.3-Codex is available across the Copilot surfaces people actually use.

Supported Copilot surfaces

Per GitHub, you can use it in:

- VS Code (chat, ask, edit, agent modes)

- GitHub.com

- GitHub Mobile

- GitHub CLI

- Copilot coding agent

GitHub also notes that the rollout is gradual, so if you do not see the model in your picker yet, that is just rollout logistics.

Who gets access

GitHub lists availability for:

- Copilot Pro

- Copilot Pro+

- Copilot Business

- Copilot Enterprise

For Copilot Business and Enterprise, GitHub notes that admins need to enable the model in Copilot policy settings before members can use it.

Why “agentic” matters now

If you have been using AI coding tools, you already know the hard part is not generating code. The hard part is everything around it.

Agentic coding is where the model has to hold up while doing things like:

- navigating unfamiliar repos

- making multi-file changes without losing the plot

- iterating after tool output (tests, linters, build errors)

- maintaining a plan across steps instead of just reacting

The real win is not that it writes code. It is that it keeps going when the job becomes a sequence.

GPT-5.3-Codex is positioned as an improvement specifically for that sequence work, which is exactly where creators and small teams feel bottlenecks: shipping landing pages, instrumenting analytics, spinning up experiments, and building internal tools under deadline pressure.

The speed claim, unpacked

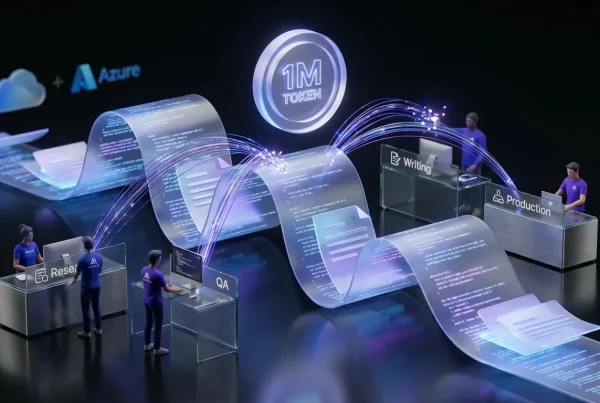

“Up to 25% faster” can sound like marketing math until you map it to an actual workflow. In agentic systems, latency compounds because the model is not responding once; it is responding dozens of times.

A typical agent loop might look like:

- interpret task

- inspect repo structure

- propose plan

- implement changes across files

- run tests

- read failures

- patch

- re-run

- summarize or open PR
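The loop above can be sketched as a small Python driver. This is a hypothetical illustration, not how Copilot’s agent is actually implemented: the callables `inspect_repo`, `plan`, `apply_change`, `run_tests`, and `patch` are placeholders you would wire to a model and your own tooling, and `max_iterations` is an assumed cap so a stuck loop hands control back to a human instead of retrying forever.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToolResult:
    """Outcome of a tool run such as a test suite (hypothetical shape)."""
    passed: bool
    failures: list[str] = field(default_factory=list)


@dataclass
class AgentRun:
    """Log of the steps an agent took and whether it reached a passing run."""
    steps: list[str] = field(default_factory=list)
    succeeded: bool = False


def run_agent_loop(
    task: str,
    inspect_repo: Callable[[], list[str]],
    plan: Callable[[str, list[str]], list[str]],
    apply_change: Callable[[str], None],
    run_tests: Callable[[], ToolResult],
    patch: Callable[[list[str]], None],
    max_iterations: int = 5,  # assumed cap on test/patch cycles
) -> AgentRun:
    run = AgentRun()
    run.steps.append(f"interpret: {task}")
    files = inspect_repo()  # inspect repo structure
    run.steps.append(f"inspect: {len(files)} file(s)")
    for step in plan(task, files):  # propose a plan, then implement each step
        apply_change(step)
        run.steps.append(f"implement: {step}")

    for attempt in range(1, max_iterations + 1):
        result = run_tests()  # run tests and read the outcome
        run.steps.append(f"test run {attempt}: {'pass' if result.passed else 'fail'}")
        if result.passed:
            run.succeeded = True
            break
        if attempt < max_iterations:  # patch only if another re-run will follow
            patch(result.failures)
            run.steps.append(f"patch: {len(result.failures)} failure(s)")

    # Summarize (or open a PR) on success; otherwise hand the failures to a human.
    run.steps.append("summarize" if run.succeeded else "escalate to human")
    return run


if __name__ == "__main__":
    # Hypothetical scripted outcomes: one failing test run, then a passing one.
    outcomes = [ToolResult(False, ["test_signup_form"]), ToolResult(True)]
    demo = run_agent_loop(
        "add a newsletter signup form",
        inspect_repo=lambda: ["src/Form.tsx", "tests/form.test.ts"],
        plan=lambda task, files: ["edit src/Form.tsx", "update tests/form.test.ts"],
        apply_change=lambda step: None,
        run_tests=lambda: outcomes.pop(0),
        patch=lambda failures: None,
    )
    print("\n".join(demo.steps))
```

The iteration cap is the main design choice here: a loop that cannot reach a passing run stops and surfaces its failures instead of patching indefinitely.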

Shaving time off each step means fewer context switches for humans, less waiting tax, and more realistic use of agents for medium-sized tasks.
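To see how a per-step speedup adds up, here is some back-of-the-envelope arithmetic as a Python sketch. The numbers (30 model calls at 20 seconds each, plus 120 seconds of test and build time) are hypothetical. The sketch assumes “up to 25% faster” means 25% less model time per call and that tool time does not change; if the figure instead means 25% more throughput, the time saved per call is closer to 20% (since 1 / 1.25 = 0.8).

```python
def loop_seconds(
    model_calls: int,
    seconds_per_call: float,
    tool_seconds: float,
    time_reduction: float = 0.0,
) -> float:
    """Wall-clock seconds for one agent loop (hypothetical model).

    Assumes only model latency shrinks by `time_reduction`; tool time
    (tests, builds, linters) stays fixed.
    """
    if not 0.0 <= time_reduction < 1.0:
        raise ValueError("time_reduction must be in [0, 1)")
    return model_calls * seconds_per_call * (1.0 - time_reduction) + tool_seconds


# Hypothetical loop: 30 model calls at 20 s each, plus 120 s of test/build time.
baseline = loop_seconds(30, 20.0, 120.0)                     # 720 s
faster = loop_seconds(30, 20.0, 120.0, time_reduction=0.25)  # 570 s

saved = baseline - faster
print(f"baseline {baseline / 60:.1f} min, faster {faster / 60:.1f} min")
print(f"saved per loop: {saved:.0f} s; over 10 loops/day: {saved * 10 / 60:.0f} min")
```

Under these assumptions one loop drops from 12 to 9.5 minutes, about 25 minutes saved across ten loops a day. The fixed tool time is why the end-to-end saving (roughly 21%) is smaller than the per-call saving.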

What changes for creators

Yes, this is a coding model. But a lot of creators are code-adjacent now: running Webflow sites with custom scripts, building Shopify add-ons, maintaining analytics pipelines, automating content packaging, or launching microsites that need to go live fast.

Real workflows it impacts

Here is where Copilot plus a stronger agentic model tends to pay off quickly:

- Campaign pages and microsites: scaffolding, styling, forms, and basic QA in one flow

- Tracking instrumentation: adding events consistently across components without missing edge cases

- Content ops utilities: CSV cleaners, caption formatters, metadata validators, batch renamers

- Rapid prototyping: interactive demos that otherwise die in the “no time” folder

Humans still own taste and final decisions. AI reduces repetitive build friction.

A quick capability snapshot

| Area | What’s new with 5.3 | Why it matters |
|---|---|---|
| Agent loops | Better long-running execution | Less stalling mid-task |
| Performance | Up to 25% faster than GPT-5.2-Codex | Shorter plan-to-fix cycles |
| Availability | Copilot-wide rollout | Less setup friction |

The security note (without the doom)

OpenAI says GPT-5.3-Codex is classified as “High” capability for cybersecurity-related tasks under its Preparedness Framework and has been trained to identify software vulnerabilities, with additional safety measures described in its release materials.

That matters for two reasons:

- It acknowledges that coding agents are not just autocomplete; misuse risk is real.

- It hints at a parallel product goal: better defensive code review behavior inside everyday tools.

For teams, the practical framing stays the same: review changes, run tests, and treat AI output like a junior developer’s work, which can be great but is not magic.

What to watch next

GPT-5.3-Codex landing inside Copilot is also a signal about where this category is going near-term:

- Model choice becomes workflow choice: you pick a model for the job at hand, whether that is debugging, refactoring, or running an agent through a multi-step ticket. GitHub maintains a living list here: Supported AI models in GitHub Copilot.

- Agents become normal: not for everything, but for the string of chores that slows shipping.

- Tooling UX matters as much as model quality: progress visibility and control determine adoption.

Bottom line

GPT-5.3-Codex being generally available in GitHub Copilot is a practical upgrade: faster agentic loops, stronger performance in tool-driven workflows, and broad availability where people already build. It does not remove the need for taste, review, or ownership, but it does reduce the grind between idea and merged PR.

If you are already living in Copilot, this is not a new habit. It is the same workflow with less drag.