Anthropic Releases Claude Haiku 4.5 for Blazing-Fast, Low-Cost Development

Anthropic has officially released Claude Haiku 4.5, its lightweight Claude 4.5-series model built for sub-second responses and budget-conscious deployment. The model is now generally available on Claude.ai, the Claude Developer Platform, Amazon Bedrock, and Google Cloud Vertex AI, with Anthropic positioning Haiku 4.5 as a near-frontier performer at a fraction of typical enterprise AI costs.

What’s new in Claude Haiku 4.5

Claude Haiku 4.5 is designed to anchor real-time, high-volume creative and operational workflows, such as customer support at scale, live creative editing, and fast-turn prototyping for apps, games, and interactive experiences: workloads where speed and price determine feasibility.

Performance and coding signals

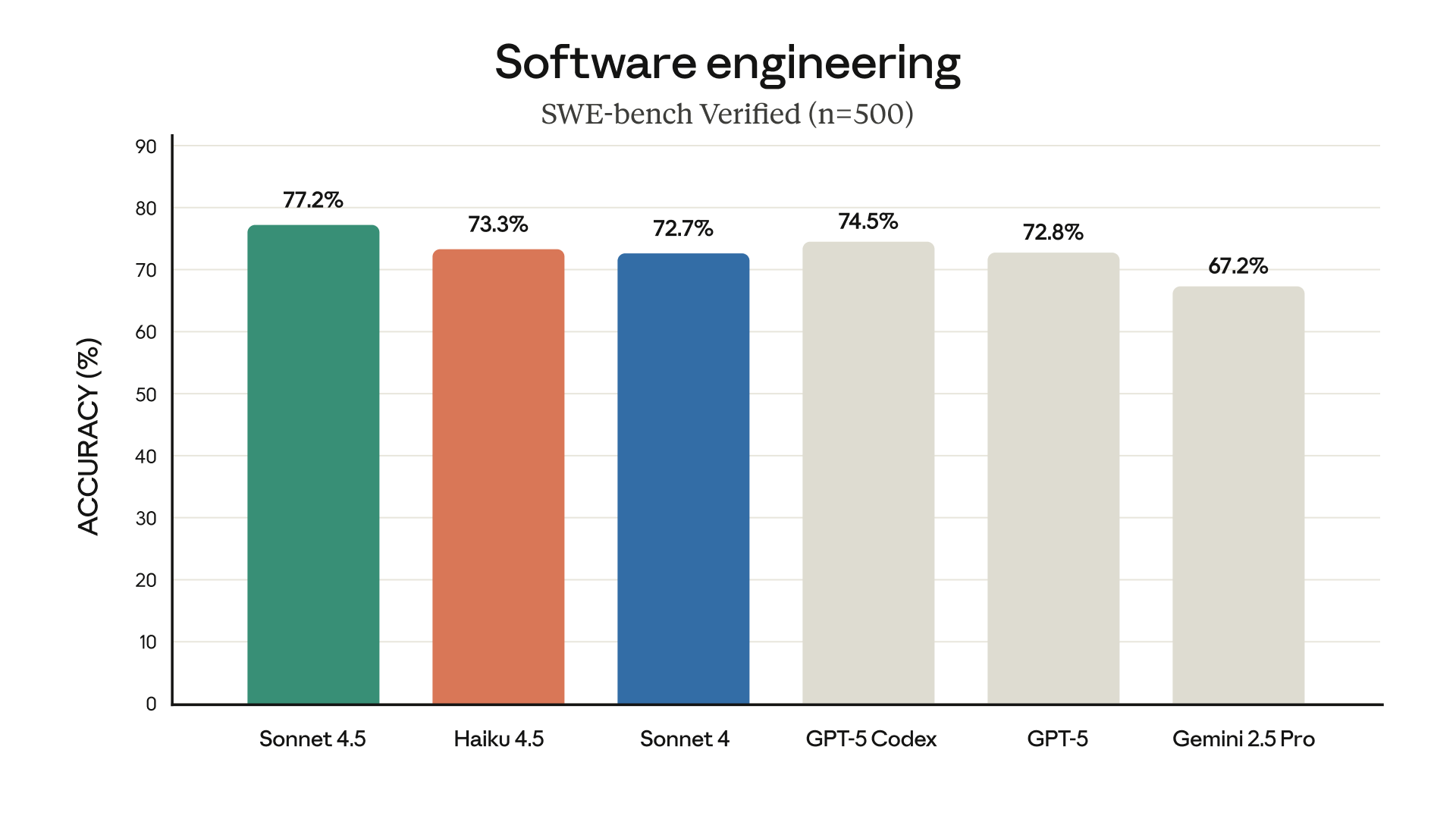

Anthropic reports a 73.3% score on SWE-bench Verified, a benchmark that evaluates models on fixing real-world code issues across open-source repositories. The company says Haiku 4.5 approaches the coding, computer-use, and agent-task performance of its larger models while remaining dramatically faster and cheaper, positioning it for always-on assistants and automation-heavy workloads.

Latency and responsiveness

Haiku 4.5 targets sub-second first-token latency on typical prompts, supporting responsive chat, voice-driven experiences, and real-time creative iteration. For creators, that means less waiting between prompt and output, which helps when generating multiple variations of scripts, social copy, style directions, or artboard descriptions under time pressure.
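Because "sub-second" is a typical-case target rather than a guarantee, it is worth measuring time to first token on your own prompts. The Python sketch below assumes only that your client can return a streaming response as an iterable of text chunks; `time_to_first_token` and `fake_stream` are hypothetical helpers, and `fake_stream` stands in for a real streaming call so the example runs offline.

```python
import time
from typing import Callable, Iterable, Iterator, List, Optional, Tuple


def time_to_first_token(
    make_stream: Callable[[], Iterable[str]],
) -> Tuple[Optional[float], str]:
    """Return (seconds until the first non-empty chunk, full response text).

    `make_stream` opens the stream; the clock starts before it is called so
    request setup counts toward latency. Returns None if no text arrives.
    """
    start = time.perf_counter()
    first_token_s: Optional[float] = None
    parts: List[str] = []
    for chunk in make_stream():
        if first_token_s is None and chunk:
            first_token_s = time.perf_counter() - start
        parts.append(chunk)
    return first_token_s, "".join(parts)


def fake_stream(delay_s: float, chunks: List[str]) -> Iterator[str]:
    """Hypothetical stand-in for a model stream: waits, then yields text chunks."""
    time.sleep(delay_s)
    yield from chunks


if __name__ == "__main__":
    ttft, text = time_to_first_token(lambda: fake_stream(0.05, ["Hello", ", world"]))
    print(f"time to first token: {ttft:.3f}s (sub-second: {ttft is not None and ttft < 1.0})")
    print(text)
```

Passing a factory rather than an already-open stream is a deliberate choice: some clients send the request as soon as the stream is opened, so starting the clock only at the first loop iteration could undercount latency.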

For fast-moving creative teams and lean startups, the headline is simple: near-frontier capability without the frontier price, sized for assistants you can keep running all day.

Speed, pricing, and deployment levers

Anthropic is foregrounding total cost of ownership alongside raw performance. The company lists token pricing at approximately $1 per million input tokens and $5 per million output tokens for Haiku 4.5. Two cost-management features are called out for high-volume use:

- Prompt Caching: Reusing a repeated prompt prefix across requests can cut costs substantially (Anthropic cites savings of up to 90% in repetitive workflows).

- Message Batches API: For asynchronous jobs that tolerate delay, batch processing offers discounted rates (Anthropic cites up to 50% savings with longer processing windows).
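To see how the listed rates and the two discount levers combine, here is a back-of-the-envelope estimator in Python. The per-token prices are the ones quoted above; treating the 90% and 50% "up to" figures as flat discounts, ignoring any surcharge for writing to the cache, and letting the two discounts stack are simplifying assumptions, so check Anthropic's current pricing page before budgeting. The workload numbers in the demo are hypothetical.

```python
from dataclasses import dataclass

# Rates quoted in the article (USD per million tokens).
INPUT_USD_PER_MTOK = 1.00
OUTPUT_USD_PER_MTOK = 5.00

# Assumption: treat Anthropic's "up to" figures as flat discounts.
CACHE_READ_DISCOUNT = 0.90  # cached input billed at 10% of the base input rate
BATCH_DISCOUNT = 0.50       # batched requests billed at half the base rate


@dataclass
class Workload:
    input_tokens: int             # fresh (uncached) input tokens
    output_tokens: int
    cached_input_tokens: int = 0  # input tokens read from the prompt cache
    batched: bool = False         # submitted via the Message Batches API


def estimate_cost_usd(w: Workload) -> float:
    """Back-of-the-envelope cost. Ignores cache-write surcharges and
    assumes the caching and batch discounts stack multiplicatively."""
    fresh = w.input_tokens / 1e6 * INPUT_USD_PER_MTOK
    cached = w.cached_input_tokens / 1e6 * INPUT_USD_PER_MTOK * (1 - CACHE_READ_DISCOUNT)
    output = w.output_tokens / 1e6 * OUTPUT_USD_PER_MTOK
    total = fresh + cached + output
    return total * (1 - BATCH_DISCOUNT) if w.batched else total


if __name__ == "__main__":
    # Hypothetical daily support load: 10,000 requests, each sharing a
    # 5,000-token cacheable prefix plus 500 fresh input and 300 output tokens.
    n = 10_000
    no_cache = Workload(input_tokens=5_500 * n, output_tokens=300 * n)
    cached = Workload(input_tokens=500 * n, output_tokens=300 * n, cached_input_tokens=5_000 * n)
    cached_batch = Workload(500 * n, 300 * n, 5_000 * n, batched=True)
    for label, w in [("no caching", no_cache), ("caching", cached), ("caching + batch", cached_batch)]:
        print(f"{label:>16}: ${estimate_cost_usd(w):,.2f}/day")
```

Under these assumptions, caching the shared prefix takes the daily estimate from about $70 to about $25, and batching the same traffic brings it to about $12.50. Real savings depend on cache hit rates and on how much traffic can actually wait for batch processing.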

Below is a quick snapshot of what’s shipping and where it fits for creators and marketing teams.

| Aspect | Claude Haiku 4.5 | Why it matters for creators |
|---|---|---|
| Model focus | Lightweight, high-speed, budget-friendly | Feasible to run assistants continuously during production sprints |
| Pricing (per million tokens) | ~$1 input; ~$5 output | Predictable at scale for content, support, and automation workloads |
| Latency | Sub-second first token (typical) | Real-time brainstorming and responsive creative flows |
| Benchmark signal | 73.3% on SWE-bench Verified | Strong coding/agent reliability for production tools |
| Deployment | Claude.ai, API, Bedrock, Vertex AI | Plug into existing cloud stacks and team habits quickly |

Where creators and startups are likely to use it

Anthropic highlights creator-forward scenarios where Haiku’s speed and price make an immediate difference to throughput and iteration:

- Real-time creative assist: Rapid brainstorming and editing loops for campaign assets, moodboards, product copy, and storyboards without long render or response delays.

- Prototyping and demos: Fast-turn interactive demos, micro-apps, and lightweight game loops, useful for pitch meetings, pilots, and A/B idea testing on tight timelines.

- Operational agents: Always-on support and workflow bots that summarize, route, and resolve repetitive queries across chat, email, and documentation, with larger models reserved only for the most complex escalations.

- Multi-agent systems: Serving as a doer sub-agent in agentic chains, where a larger model handles planning and Haiku carries out execution for speed and cost efficiency.
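The operational-agent and multi-agent patterns above amount to a simple routing rule: spend larger-model calls on planning and hard cases, and send routine execution to the fast model. A minimal Python sketch, assuming each model is wrapped as a plain function from prompt to reply; the `Orchestrator` class, its prompts, and the escalation check are hypothetical, and the stub models stand in for real API calls.

```python
from dataclasses import dataclass
from typing import Callable, List

# Any function from prompt text to reply text. In production each would wrap a
# real API call; here they are hypothetical stubs.
Model = Callable[[str], str]


@dataclass
class Orchestrator:
    planner: Model   # larger model: plans, and handles escalations
    executor: Model  # fast, low-cost model (e.g. Haiku 4.5): runs each step
    # Hypothetical check for when the fast model's reply should be escalated.
    escalate: Callable[[str], bool] = lambda reply: False

    def run(self, goal: str) -> List[str]:
        plan = self.planner(f"Break this goal into short steps, one per line:\n{goal}")
        # Keep non-empty plan lines and strip any leading "-" bullet markers.
        steps = [line.strip().lstrip("-").strip() for line in plan.splitlines() if line.strip()]
        results: List[str] = []
        for step in steps:
            prompt = f"Complete this step:\n{step}"
            reply = self.executor(prompt)
            if self.escalate(reply):
                reply = self.planner(prompt)  # only hard cases reach the larger model
            results.append(reply)
        return results


if __name__ == "__main__":
    def stub_planner(prompt: str) -> str:
        return "- Draft three headlines\n- Write a 50-word product blurb"

    def stub_executor(prompt: str) -> str:
        return f"[fast model] {prompt.splitlines()[-1]}"

    for reply in Orchestrator(stub_planner, stub_executor).run("Launch copy for a new sneaker"):
        print(reply)
```

Keeping the models behind plain callables makes the routing testable offline and lets a team swap in clients for the Claude Developer Platform, Amazon Bedrock, or Vertex AI without changing the orchestration logic.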

Safety, alignment, and reliability claims

Anthropic says Haiku 4.5 operates under its AI Safety Level 2 (ASL-2) protocols, a designation the company uses for models with a lower risk profile across misuse categories. The company’s transparency materials outline evaluation areas such as cooperation with misuse, compliance with harmful instructions, and deception. More on the framework is available via Anthropic’s transparency hub.

Takeaway for teams

ASL-2 is intended to enable broad deployment with consistent guardrails. That matters for brands and agencies adopting AI across customer touchpoints, where reliability and content safety are non-negotiable.

Availability and platform support

Anthropic lists immediate access across:

- Claude.ai: Web and mobile for end users seeking a GUI-backed creative partner.

- Claude Developer Platform (API): Direct integration for apps, assistants, and internal tools.

- Amazon Bedrock and Google Cloud Vertex AI: Enterprise integration for compliance-minded teams with existing cloud workflows.

Price controls such as prompt caching and batch processing indicate that Anthropic is building for production operations, not just demos: settings where teams must manage spend without sacrificing responsiveness.

Industry context: benchmarks and the fast model race

Haiku 4.5 arrives amid a sprint to redefine what a small or light model can do in production. The model’s SWE-bench Verified score places it in a competitive pack for code generation and issue resolution, areas increasingly important to creators who automate repetitive tasks or scaffold project foundations. The bar chart above situates Haiku among top coding models, with Anthropic emphasizing speed to first token and cost per output as decisive dimensions for day-to-day creative operations.

Also launching: Alibaba’s Qwen3-Omni targets reasoning and multi-modal agents

Alongside Anthropic’s release, Alibaba Cloud introduced the Qwen3 lineup’s latest flagship, Qwen3-Omni, a natively omni-modal model trained end to end across text, images, audio, and video. In its announcement, Alibaba reports that across 36 audio and audio-visual benchmarks, Qwen3-Omni achieves open-source state-of-the-art results on 32 and overall state-of-the-art results on 22, with streaming text and natural-speech output designed for hands-free experiences.

Early positioning puts Qwen3-Omni in the lead cohort for:

- Complex reasoning and math: Multi-step execution and planning tasks.

- Agentic pipelines: Tool use and sequential decision-making for research, coding, and workflow automation.

- Voice and multimodal UX: Real-time speech, AV input, and mobile form factors.

Alibaba says Qwen3-Omni is available for immediate trial through Model Studio and APIs, enabling teams to test agent behaviors and multimodal flows without upfront commitment.

Side-by-side snapshot

| Model | Primary Strength | Standout Signals | Access | Cost Notes |
|---|---|---|---|---|
| Claude Haiku 4.5 | Speed and affordability for always-on assistants | 73.3% SWE-bench Verified; sub-second responses (typical) | Claude.ai, API, Amazon Bedrock, Google Cloud Vertex AI | ~$1/M input tokens; ~$5/M output tokens; prompt caching; batch discounts |
| Qwen3-Omni | Omni-modal reasoning and streaming speech | SOTA on selected AV benchmarks; real-time text + voice output | Alibaba Cloud Model Studio and APIs | Free trials via Model Studio; commercial terms via Alibaba Cloud |

What this means for creators, founders, and brand teams

Two directions are crystallizing across the AI stack:

- Speed-first, cost-controlled engines: Claude Haiku 4.5 signals that lightweight models can shoulder a large share of production workloads, including content iteration, support automation, and agent execution, without prohibitive spend.

- Omni-modal, agent-forward systems: Qwen3-Omni underscores momentum toward voice-native interfaces and multi-step, tool-using agents that operate across text, images, and audio/video in real time.

For fast-moving teams, the practical implication is a portfolio strategy: deploy a high-speed, low-cost workhorse for everyday throughput, and evaluate an omni-modal model where voice, video, or complex multi-step reasoning is central to the experience.

Availability and links

- Claude Haiku 4.5 announcement and expanded details: anthropic.com/news/claude-haiku-4-5

- Claude Haiku 4.5 model page and pricing: anthropic.com/claude/haiku

- Anthropic’s safety and ASL framework: anthropic.com/transparency

- Qwen3-Omni announcement overview: alibabacloud.com/blog/602581

- Qwen access via Model Studio: alibabacloud.com/product/modelstudio

Bottom line: For creators and lean teams, Haiku 4.5 establishes a new operating baseline for speed and affordability in daily production. In parallel, Qwen3-Omni’s omni-modal focus pushes voice and multi-step agentic work closer to real-world deployment. The market is converging on practical, scalable tools built to keep pace with the realities of creative and brand execution.